TensorFlow 2.x Implementations of Some CNN Blocks/Networks

I am open-sourcing TensorFlow 2.x implementations of some CNN blocks/networks; the repository may be updated from time to time. Repository: TensorFlow-2-Implementations-of-CNN-Based-Networks

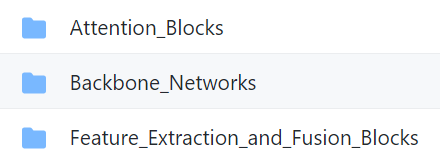

The current implementations include:

Feature Extraction/Fusion Blocks

Atrous Convolutional Block for 1D (data points / sequences) or 2D inputs (images / feature maps), suggested by An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling

Receptive Field Block, from Receptive Field Block Net for Accurate and Fast Object Detection
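As a rough illustration of the atrous (dilated) convolutional block listed above, here is a minimal sketch for the 1D case, loosely following the residual block described in the sequence-modeling paper. The class name `AtrousResidualBlock1D` and its parameters are hypothetical and are not taken from the repository. As a simplification, the sketch always applies a 1x1 convolution on the shortcut, whereas the paper only does so when channel counts differ, and it omits weight normalization.

```python
import tensorflow as tf


class AtrousResidualBlock1D(tf.keras.layers.Layer):
    """Hypothetical TCN-style block: two dilated causal Conv1D layers plus a skip path."""

    def __init__(self, filters, kernel_size=3, dilation_rate=1, dropout=0.0, **kwargs):
        super().__init__(**kwargs)
        # padding="causal" pads only on the left, so step t never sees steps > t.
        self.conv1 = tf.keras.layers.Conv1D(
            filters, kernel_size, padding="causal",
            dilation_rate=dilation_rate, activation="relu")
        self.conv2 = tf.keras.layers.Conv1D(
            filters, kernel_size, padding="causal",
            dilation_rate=dilation_rate, activation="relu")
        self.dropout = tf.keras.layers.Dropout(dropout)
        # Simplification: always project the input with a 1x1 convolution
        # so that it can be added to the convolutional branch.
        self.shortcut = tf.keras.layers.Conv1D(filters, 1, padding="same")

    def call(self, inputs, training=None):
        x = self.conv1(inputs)
        x = self.dropout(x, training=training)
        x = self.conv2(x)
        x = self.dropout(x, training=training)
        return tf.nn.relu(x + self.shortcut(inputs))


# Usage: a batch of 2 sequences, 16 time steps, 4 features each.
sequences = tf.random.normal((2, 16, 4))
features = AtrousResidualBlock1D(filters=8, dilation_rate=2)(sequences)
print(features.shape)  # (2, 16, 8)
```

Stacking such blocks with dilation rates 1, 2, 4, ... grows the receptive field exponentially with depth while keeping the sequence length unchanged.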

Attention Blocks

Squeeze-and-Excitation Block (a form of channel attention), from Squeeze-and-Excitation Networks

Convolutional Block Attention Module (CBAM), including Channel Attention Module and Spatial Attention Module, from CBAM: Convolutional Block Attention Module

Non-Local Block, including ‘Gaussian’, ‘Embedded Gaussian’, ‘Dot Product’ and ‘Concatenation’ modes, from Non-local Neural Networks

Dual Attention Module, including Channel Attention Module and Position Attention Module, from Dual Attention Network for Scene Segmentation
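To give a feel for the attention blocks listed above, the simplest of them, the Squeeze-and-Excitation block, can be sketched as follows. This is only an illustrative sketch under stated assumptions: the class name `SqueezeExcitation` and the `channels` and `reduction` arguments are hypothetical and do not reflect the repository's actual interface. It assumes channels-last 2D feature maps.

```python
import tensorflow as tf


class SqueezeExcitation(tf.keras.layers.Layer):
    """Hypothetical SE block for channels-last feature maps of shape (B, H, W, C)."""

    def __init__(self, channels, reduction=16, **kwargs):
        super().__init__(**kwargs)
        self.channels = channels
        hidden = max(channels // reduction, 1)  # bottleneck width
        # Squeeze: one scalar per channel via global average pooling.
        self.squeeze = tf.keras.layers.GlobalAveragePooling2D()
        # Excitation: bottleneck MLP ending in a sigmoid gate per channel.
        self.fc1 = tf.keras.layers.Dense(hidden, activation="relu")
        self.fc2 = tf.keras.layers.Dense(channels, activation="sigmoid")

    def call(self, inputs):
        weights = self.squeeze(inputs)            # (B, C)
        weights = self.fc2(self.fc1(weights))     # (B, C), values in (0, 1)
        weights = tf.reshape(weights, (-1, 1, 1, self.channels))
        # Scale: reweight every channel of the input feature map.
        return inputs * weights


# Usage: recalibrate a batch of 8x8 feature maps with 32 channels.
feature_maps = tf.random.uniform((2, 8, 8, 32))
recalibrated = SqueezeExcitation(channels=32, reduction=16)(feature_maps)
print(recalibrated.shape)  # (2, 8, 8, 32)
```

The output has the same shape as the input, so a block like this can be dropped in after any convolutional stage without changing the rest of the network.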

Backbone Networks

Parts of the code draw on the following articles and repositories, with thanks to their authors:

[1] https://github.com/philipperemy/keras-tcn

[2] https://github.com/Baichenjia/Tensorflow-TCN/blob/master/tcn.py

[3] https://arxiv.org/pdf/1803.01271.pdf

[4] https://arxiv.org/pdf/1711.07767.pdf

[5] https://arxiv.org/abs/1709.01507

[6] https://github.com/kobiso/CBAM-tensorflow-slim/blob/master/nets/attention_module.py

[7] https://arxiv.org/abs/1807.06521

[8] https://arxiv.org/pdf/1711.07971.pdf

[9] https://github.com/titu1994/keras-non-local-nets/blob/master/non_local.py

[10] https://github.com/Tramac/Non-local-tensorflow/tree/master/non_local

[11] https://arxiv.org/pdf/1809.02983.pdf

[12] https://github.com/niecongchong/DANet-keras/blob/master/layers/attention.py

[13] https://github.com/okason97/DenseNet-Tensorflow2/blob/master/densenet/densenet.py

[14] https://arxiv.org/pdf/1608.06993.pdf
